Deep Belief Networks (DBNs) are a type of generative artificial neural network that played an important role in the development of deep learning in artificial intelligence (AI). DBNs are capable of learning complex representations of data and have been applied to a variety of tasks, such as image and speech recognition, natural language processing, and anomaly detection. In this article, we will explore what DBNs are, how they work, and their applications in AI.

What are Deep Belief Networks?

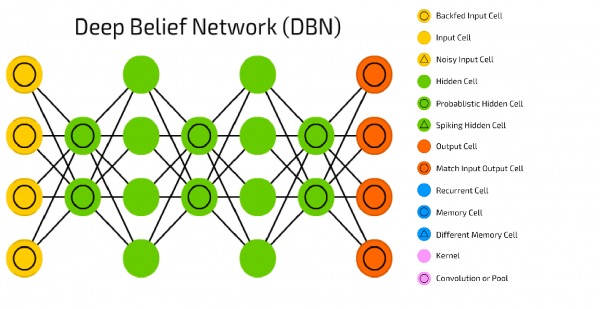

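Deep Belief Networks are a type of neural network composed of multiple layers of interconnected nodes: a layer of visible units that receives the input data, followed by several layers of hidden units. Each layer is connected to the layers directly above and below it, but units within the same layer are not connected to each other. In the original formulation, the connections between the top two layers are undirected, while the lower layers have directed, top-down connections. DBNs are hierarchical in nature, with each layer learning more complex representations of the input data.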

DBNs are built from restricted Boltzmann machines (RBMs), which are a type of stochastic artificial neural network with one layer of visible units and one layer of hidden units. The "restricted" refers to the connectivity: every visible unit is connected to every hidden unit, but there are no connections within a layer. RBMs are used to learn the probability distribution of a set of input data, and can be used to generate new samples from the learned distribution. DBNs are composed of multiple RBMs, which are trained layer by layer to learn the representations of the input data.
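To make the RBM building block concrete, here is a minimal sketch in Python with NumPy, assuming binary (Bernoulli) visible and hidden units. The class name `BinaryRBM` and its methods are illustrative, not taken from any library. It shows the energy function, the two conditional distributions that the restricted connectivity makes easy to compute, and how alternating Gibbs sampling generates new samples from the model. The weights are only initialized here, not trained, so the generated samples are close to random.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class BinaryRBM:
    """Illustrative Bernoulli-Bernoulli RBM: visible and hidden units are
    connected to each other, with no visible-visible or hidden-hidden links."""

    def __init__(self, n_visible, n_hidden, seed=0):
        self.rng = np.random.default_rng(seed)
        self.W = self.rng.normal(0.0, 0.01, size=(n_visible, n_hidden))
        self.b = np.zeros(n_visible)  # visible biases
        self.c = np.zeros(n_hidden)   # hidden biases

    def energy(self, v, h):
        # E(v, h) = -b.v - c.h - v^T W h; p(v, h) is proportional to exp(-E)
        return -(v @ self.b) - (h @ self.c) - np.sum((v @ self.W) * h, axis=-1)

    def p_h_given_v(self, v):
        # Hidden units are conditionally independent given the visible units
        return sigmoid(v @ self.W + self.c)

    def p_v_given_h(self, h):
        # Visible units are conditionally independent given the hidden units
        return sigmoid(h @ self.W.T + self.b)

    def _bernoulli(self, p):
        # Draw binary samples with the given probabilities
        return (self.rng.random(p.shape) < p).astype(float)

    def gibbs_sample(self, n_samples, n_steps=100):
        """Draw visible vectors by alternating between p(h|v) and p(v|h)."""
        v = self._bernoulli(np.full((n_samples, self.b.size), 0.5))
        for _ in range(n_steps):
            h = self._bernoulli(self.p_h_given_v(v))
            v = self._bernoulli(self.p_v_given_h(h))
        return v
```

In a trained RBM, `W`, `b`, and `c` would be learned from data, and running the Gibbs chain for more steps brings the samples closer to the learned distribution.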

How do Deep Belief Networks work?

The first stage of DBN training uses unsupervised learning. In unsupervised learning, the model is not given any explicit labels for the input data. Instead, it learns to extract features and representations from the data without any external guidance.

The training process for DBNs is typically done in two phases. In the first phase, known as greedy layer-wise pretraining, each layer is trained independently as an RBM using unsupervised learning. This involves initializing the weights randomly (typically with small values) and using an iterative algorithm, most commonly contrastive divergence, to adjust the weights so that the RBM assigns high probability to the training data. The reconstruction error between the input data and the RBM's reconstruction of it is often monitored as a rough measure of progress. Once the first layer is trained, its hidden-unit activations are used as the input for the second layer, and the process is repeated until all layers have been trained.
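The first, unsupervised phase can be sketched as follows, assuming binary input data and binary RBMs trained with one-step contrastive divergence (CD-1). The function names `train_rbm`, `pretrain_dbn`, and `reconstruction_error` and all hyperparameter defaults are illustrative choices, not a reference implementation. Each RBM is trained on the hidden-unit probabilities produced by the RBM below it, which is the greedy layer-wise scheme described above.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def train_rbm(data, n_hidden, epochs=20, lr=0.1, batch_size=16, seed=0):
    """Train a binary RBM with CD-1 (illustrative). Returns (W, b, c)."""
    rng = np.random.default_rng(seed)
    n_visible = data.shape[1]
    W = rng.normal(0.0, 0.01, size=(n_visible, n_hidden))  # small random init
    b = np.zeros(n_visible)  # visible biases
    c = np.zeros(n_hidden)   # hidden biases
    for _ in range(epochs):
        order = rng.permutation(len(data))
        for start in range(0, len(data), batch_size):
            v0 = data[order[start:start + batch_size]]
            # Positive phase: hidden probabilities driven by the data
            ph0 = sigmoid(v0 @ W + c)
            h0 = (rng.random(ph0.shape) < ph0).astype(float)
            # Negative phase: one Gibbs step gives a reconstruction
            pv1 = sigmoid(h0 @ W.T + b)
            ph1 = sigmoid(pv1 @ W + c)
            # CD-1 update: an approximation of the log-likelihood gradient
            n = len(v0)
            W += lr * (v0.T @ ph0 - pv1.T @ ph1) / n
            b += lr * (v0 - pv1).mean(axis=0)
            c += lr * (ph0 - ph1).mean(axis=0)
    return W, b, c


def pretrain_dbn(data, layer_sizes, **kwargs):
    """Greedy layer-wise pretraining: one RBM per entry in layer_sizes."""
    layers = []
    x = data
    for n_hidden in layer_sizes:
        W, b, c = train_rbm(x, n_hidden, **kwargs)
        layers.append((W, b, c))
        x = sigmoid(x @ W + c)  # hidden probabilities feed the next RBM
    return layers


def reconstruction_error(data, W, b, c):
    """Mean squared error of a deterministic up-down pass (a progress proxy)."""
    recon = sigmoid(sigmoid(data @ W + c) @ W.T + b)
    return np.mean((data - recon) ** 2)
```

For example, calling `pretrain_dbn(X, [256, 128])` on a binary data matrix `X` with 784 columns would return two `(W, b, c)` tuples whose weight matrices have shapes `(784, 256)` and `(256, 128)`.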

In the second phase of training, the DBN is fine-tuned using supervised learning. This involves using labeled data to adjust the weights of the network to minimize the error between the predicted labels and the actual labels.
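For a classification task, the second, supervised phase can be sketched as plain backpropagation: the pretrained sigmoid layers are kept, a softmax output layer is added on top, and all weights are updated to minimize cross-entropy. This is a minimal NumPy sketch under those assumptions; the names `fine_tune`, `forward`, and `cross_entropy` are hypothetical, and the learning rate and epoch count are arbitrary. In practice, `pretrained` would hold the weights and hidden biases learned during pretraining, but any list of `(W, c)` pairs with matching shapes works for trying the code.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def forward(X, Ws, cs, V, d):
    """Class probabilities from the sigmoid stack plus softmax output layer."""
    h = X
    for W, c in zip(Ws, cs):
        h = sigmoid(h @ W + c)
    return softmax(h @ V + d)


def cross_entropy(X, y, Ws, cs, V, d):
    probs = forward(X, Ws, cs, V, d)
    return -np.mean(np.log(probs[np.arange(len(y)), y] + 1e-12))


def fine_tune(X, y, pretrained, n_classes, epochs=200, lr=0.5, seed=0):
    """Full-batch gradient descent on mean cross-entropy (illustrative)."""
    rng = np.random.default_rng(seed)
    Ws = [W.copy() for W, _ in pretrained]  # copies: inputs stay untouched
    cs = [c.copy() for _, c in pretrained]
    V = rng.normal(0.0, 0.01, size=(Ws[-1].shape[1], n_classes))
    d = np.zeros(n_classes)
    Y = np.eye(n_classes)[y]  # one-hot labels
    n = len(X)
    for _ in range(epochs):
        # Forward pass, keeping every layer's activations
        acts = [X]
        for W, c in zip(Ws, cs):
            acts.append(sigmoid(acts[-1] @ W + c))
        probs = softmax(acts[-1] @ V + d)
        # Output layer: gradient of mean cross-entropy w.r.t. the logits
        delta = (probs - Y) / n
        grad_V, grad_d = acts[-1].T @ delta, delta.sum(axis=0)
        delta = (delta @ V.T) * acts[-1] * (1 - acts[-1])
        V -= lr * grad_V
        d -= lr * grad_d
        # Hidden layers, from the top down
        for i in reversed(range(len(Ws))):
            grad_W, grad_c = acts[i].T @ delta, delta.sum(axis=0)
            if i > 0:  # propagate with the weights before they are updated
                delta = (delta @ Ws[i].T) * acts[i] * (1 - acts[i])
            Ws[i] -= lr * grad_W
            cs[i] -= lr * grad_c
    return Ws, cs, V, d
```

Only the hidden biases `c` of each pretrained RBM are used here; the visible biases are needed for reconstruction but not for the upward pass of a classifier.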

Applications of Deep Belief Networks

Deep Belief Networks have many applications in AI, including:

1- Image and speech recognition: DBNs have been used in image and speech recognition tasks to learn complex representations of the input data. For example, DBNs have been used to classify images of handwritten digits and to recognize spoken words in audio recordings.

2- Natural language processing: DBNs have been used in natural language processing tasks, such as language modeling, sentiment analysis, and text classification.

3- Anomaly detection: DBNs can be used to detect anomalies in data, such as fraudulent credit card transactions or network intrusions.

4- Collaborative filtering: DBNs can be used in collaborative filtering tasks, such as recommending movies or products to users based on their past behavior.

5- Drug discovery: DBNs have been used to predict the properties of drug molecules and to design new drug candidates.

Python Example

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras.layers import Dense

# Generate some random data: 100 samples with 784 features in [0, 1)
x_train = np.random.rand(100, 784)

# Define the model. Note: this is a feed-forward autoencoder with
# DBN-like layer sizes; it contains no RBMs and is trained end to end
# with backpropagation rather than greedy layer-wise pretraining.
dbn = tf.keras.models.Sequential()

# First layer: a dense layer with 256 neurons and ReLU activation.
# The input shape is (784,), the shape of each input vector.
dbn.add(Dense(units=256, activation='relu', input_shape=(784,)))

# Second layer: a dense layer with 128 neurons and ReLU activation
dbn.add(Dense(units=128, activation='relu'))

# Output layer: 784 sigmoid units, so the output has the same shape
# as the input and every value lies in (0, 1)
dbn.add(Dense(units=784, activation='sigmoid'))

# Compile with binary cross-entropy loss and the Adam optimizer
dbn.compile(loss='binary_crossentropy', optimizer='adam')

# Train the model to reconstruct its own input (targets = inputs)
dbn.fit(x_train, x_train, epochs=10, batch_size=32)
```

In this code, we define a Keras `Sequential` model with three `Dense` layers (256 and 128 ReLU units, followed by a 784-unit sigmoid output layer) and train it for 10 epochs to reconstruct 100 random 784-dimensional input vectors, using binary cross-entropy loss and the Adam optimizer. Strictly speaking, this is a feed-forward autoencoder trained end to end with backpropagation rather than a true DBN: it contains no RBMs and skips the unsupervised layer-wise pretraining described earlier.

Conclusion

Deep Belief Networks are a powerful type of neural network that are capable of learning complex representations of data. They have many applications in AI, including image and speech recognition, natural language processing, anomaly detection, collaborative filtering, and drug discovery. By continuing to explore and innovate in this area, we may be able to leverage the power of DBNs to solve complex problems and improve our lives in meaningful ways.